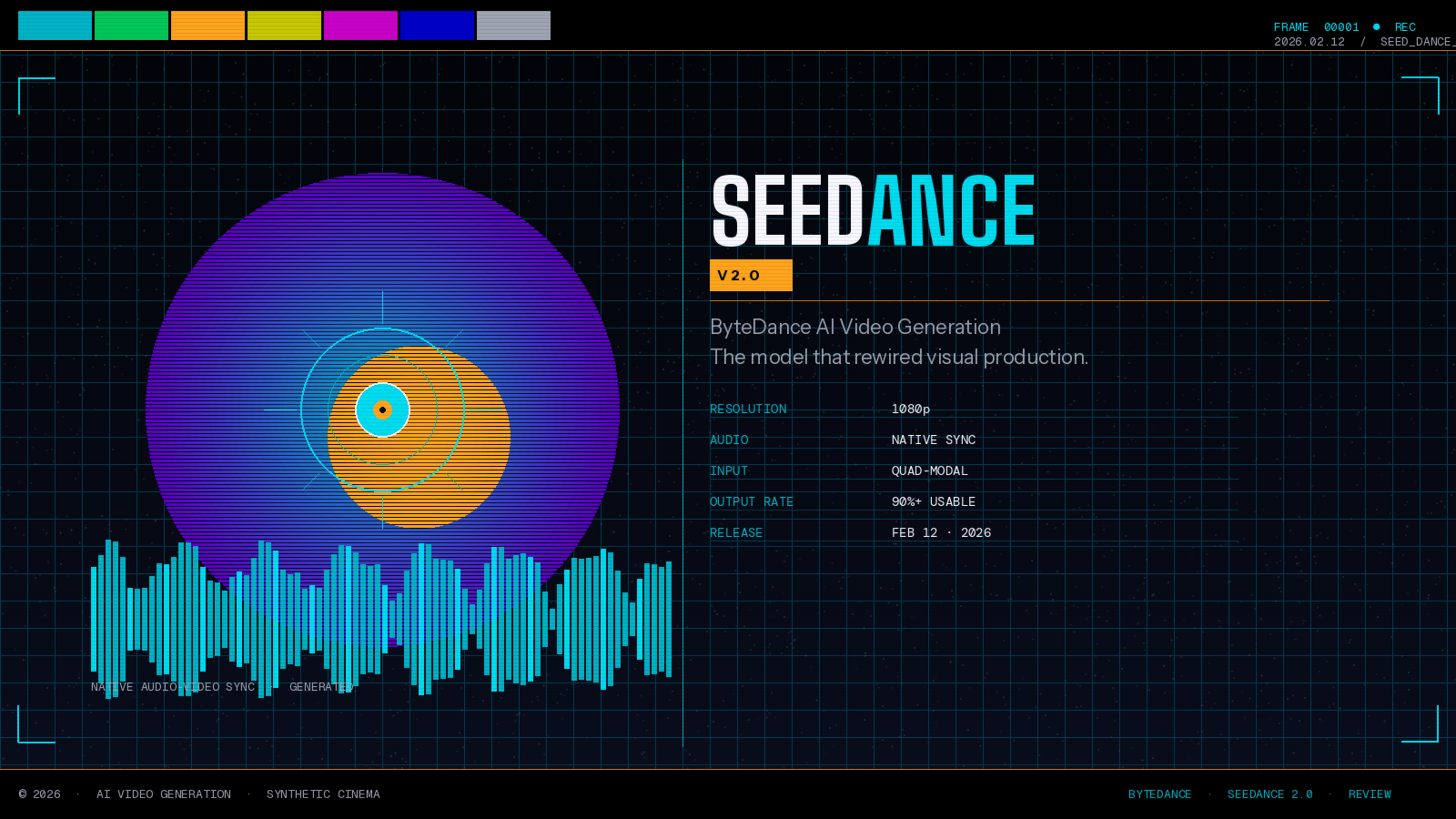

Seedance 2.0 Review: ByteDance’s AI Video Tool Is Reshaping the Future of Content Creation

The AI video generation space just had its biggest shakeup of 2026. On February 12, ByteDance — the company behind TikTok and CapCut — officially launched Seedance 2.0, a next-generation AI video model that’s sending shockwaves through the creative, marketing, and film industries. Within hours of release, it was trending globally, sparking viral reactions from content creators, Hollywood studios, and AI researchers alike.

If you haven’t heard of Seedance 2.0 yet, this guide covers the essentials: what it does, how it compares to competitors, who it’s for, and why it matters for content creators and marketers in 2026.

What Is Seedance 2.0? ByteDance’s Answer to Sora and Veo

Seedance 2.0 is ByteDance’s latest AI video generation model — and it’s not a minor update. It represents a fundamental architectural leap over its predecessor, Seedance 1.5 Pro, introducing what ByteDance calls a “unified multimodal audio-video joint generation architecture.” In plain terms: this tool can take text, images, video clips, and audio all at once, and turn them into polished, cinematic short-form video — with synchronized audio baked in from the start.

The model currently supports video generation up to 15 seconds in length at up to 1080p resolution, and it’s available on ByteDance’s Jimeng AI and Doubao platforms for Chinese users, with a global rollout via CapCut expected soon.

What makes Seedance 2.0 stand out from the crowd isn’t just one feature — it’s the combination of capabilities that, until now, required multiple tools and hours of post-production work.

Seedance 2.0 Features: What Makes This AI Video Tool Different

At its core, Seedance 2.0 is built around a “quad-modal” input system — meaning it accepts up to 9 images, 3 video clips, and 3 audio files alongside your text prompt, all in a single generation request. This is a level of reference control that competitors like Runway, Pika, and even Sora 2 don’t currently offer in one unified workflow.
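To make those input limits concrete, here is a minimal sketch of a pre-flight check a team might run before submitting a request. The `GenerationRequest` class and its field names are hypothetical and are not part of any ByteDance SDK; the limits are simply the ones quoted above (9 images, 3 video clips, 3 audio files).

```python
from dataclasses import dataclass, field

# Limits as reported in this article; not taken from official documentation.
MAX_IMAGES = 9
MAX_VIDEOS = 3
MAX_AUDIO = 3


@dataclass
class GenerationRequest:
    """Hypothetical container for one multimodal generation request."""
    prompt: str
    images: list[str] = field(default_factory=list)
    videos: list[str] = field(default_factory=list)
    audio: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return human-readable limit violations (empty list means OK)."""
        found = []
        if not self.prompt.strip():
            found.append("text prompt is empty")
        for label, items, limit in (
            ("images", self.images, MAX_IMAGES),
            ("videos", self.videos, MAX_VIDEOS),
            ("audio", self.audio, MAX_AUDIO),
        ):
            if len(items) > limit:
                found.append(f"too many {label}: {len(items)} > {limit}")
        return found


request = GenerationRequest(
    prompt="A skater lands a jump at sunset",
    images=["hero.png"],
    videos=["a.mp4", "b.mp4", "c.mp4", "d.mp4"],
)
print(request.problems())  # ['too many videos: 4 > 3']
```

Checking locally like this is just one way to avoid spending credits on a request that would exceed the stated limits.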

Here’s what the Seedance 2.0 feature set looks like in practice:

Native Audio-Video Generation: Unlike most AI video tools that produce visuals and leave you to add audio separately, Seedance 2.0 generates synchronized dialogue, sound effects, and background music as part of the same process. This alone eliminates hours of post-production.

Multi-Shot Storytelling: Instead of generating one isolated clip, Seedance 2.0 autonomously plans camera language — establishing shots, medium frames, close-ups — and sequences them into a coherent narrative. ByteDance describes this as “director-level thinking” built directly into the model.

@ Reference System: Users can tag specific uploaded files in their text prompt (e.g., “@Video1 for camera movement,” “@Image1 as first frame”) to precisely direct how each asset influences the output. This gives creators a level of specificity comparable to instructing a real film crew.

Character Consistency: One of the biggest pain points in AI video has always been “character drift” — when a character’s face or clothing changes mid-scene. Seedance 2.0 addresses this directly with locked character consistency across multi-shot sequences, making it practical for storytelling and brand video production.

90%+ Usable Output Rate: Where traditional AI video tools like earlier Runway or Pika models yielded roughly 20% usable outputs (requiring multiple retries), Seedance 2.0 reportedly delivers usable results on the first generation over 90% of the time — a fundamental shift from experimental to industrial-grade production.
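To put the usable-output figures in perspective, the short calculation below estimates how many generations it takes, on average, to get one usable clip. It assumes each generation is an independent attempt with a fixed success chance, which is a simplification rather than something ByteDance has stated; the 20% and 90% rates are the ones reported above.

```python
def expected_attempts(success_rate: float) -> float:
    """Mean number of generations until the first usable clip.

    Treats each generation as an independent trial with a fixed chance
    of success, so the count follows a geometric distribution (mean 1/p).
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return 1 / success_rate


older = expected_attempts(0.20)  # ~20% usable, as cited for older tools
newer = expected_attempts(0.90)  # 90% usable, as reported for Seedance 2.0

print(f"older tools:  {older:.2f} generations per usable clip")
print(f"Seedance 2.0: {newer:.2f} generations per usable clip")
print(f"ratio: {older / newer:.1f}x fewer generations")
```

Under that assumption, a 20% rate means about five generations per usable clip, while a 90% rate means about 1.1, or roughly 4.5 times fewer generations for the same output.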

How Seedance 2.0 Compares to Sora 2, Veo 3.1, and Kling 3.0

The AI video generation market in 2026 is intensely competitive. Here’s how Seedance 2.0 stacks up against the main rivals:

Seedance 2.0 vs. Sora 2 (OpenAI): Sora 2 still leads on physics simulation and long-take coherence — it produces the most physically believable motion for single extended sequences. However, Seedance 2.0 beats it on workflow integration, native audio, and multimodal reference control. Sora 2 also carries a higher compute cost per generation.

Seedance 2.0 vs. Veo 3.1 (Google): Google’s Veo 3.1 is praised for color science and broadcast-quality output, making it strong for professional film and TV workflows. But Seedance 2.0 is faster for short cinematic shots and more accessible via its CapCut and Doubao integrations — platforms with hundreds of millions of existing users.

Seedance 2.0 vs. Kling 3.0 (Kuaishou): Kling 3.0 is the budget-friendly speed option — great for quick prototypes. Seedance 2.0 is slower by comparison but delivers meaningfully better quality, consistency, and audio capabilities for production work.

The consensus among early testers: Seedance 2.0 is the most workflow-friendly AI video tool currently available, especially for content creators and marketing teams who need repeatable, high-quality short-form video at scale.

Who Should Use Seedance 2.0? The Best Use Cases for Creators and Marketers

Seedance 2.0 isn’t a toy — it’s built for real production workflows. Here are the primary use cases where it delivers the most value:

Content Creators and Social Media Teams: The tight integration with CapCut (coming globally) means creators can go from idea to finished video without leaving an app they already use. The native audio-video sync makes it ideal for TikToks, Reels, and YouTube Shorts.

Marketing and Advertising Agencies: The ability to lock brand colors, maintain character consistency, and reference existing campaign materials makes Seedance 2.0 a practical option for ad production. Early estimates suggest that producing ad content with this tool could cut VFX costs dramatically compared with traditional production.

Filmmakers and Storyboard Artists: Seedance 2.0’s multi-shot planning and director-level camera sequencing make it an exceptional previsualization tool. You can sketch out an entire scene from a storyboard prompt in minutes.

Game Developers: Complex multi-character action scenes — previously a major weakness of AI video tools — are a stated strength of Seedance 2.0. ByteDance demonstrated the model generating synchronized pair figure skating with multiple mid-air spins, which is exactly the kind of complex motion that matters for game cinematics.

The Seedance 2.0 Controversy: Copyright, Hollywood, and Ethical Concerns

It wouldn’t be a complete Seedance 2.0 review without addressing the elephant in the room. The tool’s launch immediately triggered one of the most intense AI copyright controversies in recent memory.

Within a day of launch, users were generating viral videos featuring Tom Cruise fighting Brad Pitt, Disney characters like Spider-Man and Darth Vader, and scenes that were nearly indistinguishable from licensed intellectual property. The Motion Picture Association issued a statement from CEO Charles Rivkin calling for ByteDance to cease activity it described as unauthorized use of copyrighted works on a massive scale.

Disney sent a cease-and-desist letter accusing ByteDance of what it called a “virtual smash-and-grab” of its IP. Paramount followed suit shortly after. SAG-AFTRA, other Hollywood unions, and the Human Artistry Campaign publicly condemned the tool.

ByteDance responded quickly with emergency policy changes banning real human face uploads as reference material — a reaction triggered after a demo showed the AI generating a photorealistic digital clone of a tech influencer from just static photos, without any voice samples or motion capture data.

Deadpool screenwriter Rhett Reese summed up the mood of many in Hollywood in a single post: “It’s likely over for us.”

The guardrails question is not unique to Seedance 2.0 — Sora 2 faces similar criticisms — but the speed and scale at which ByteDance’s tool generated copyrighted content set a new level of urgency around the debate. It’s a reminder that every powerful AI tool comes with real ethical and legal questions that the industry is still working to answer.

How to Access Seedance 2.0 Today

Seedance 2.0 is currently available to users in China through ByteDance’s Jimeng AI and Doubao platforms. A free tier exists with limited daily credits, supporting 720p output. Higher resolution (1080p) and priority access are tied to paid tiers.

For global users, ByteDance has confirmed that Seedance 2.0 will be available through CapCut — though an exact international rollout date hasn’t been confirmed as of late February 2026. Developers can also access the model through an API via Volcano Engine, ByteDance’s cloud services platform.
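For developers who assemble prompts programmatically, it can help to confirm that every @ reference in a prompt points at an asset that was actually uploaded before sending anything. The sketch below is a local check only and makes no calls to any ByteDance or Volcano Engine API. The function name is hypothetical, the `@Image1` and `@Video1` forms come from the examples earlier in this article, and treating `@AudioN` the same way is an assumption.

```python
import re

# Matches tags such as @Image1 or @Video2. The Image/Video forms appear in
# this article; treating @AudioN the same way is an assumption.
TAG_PATTERN = re.compile(r"@(Image|Video|Audio)(\d+)")


def unresolved_references(prompt: str, uploads: dict[str, int]) -> list[str]:
    """Return tags in `prompt` that point past the uploaded assets.

    `uploads` maps an asset kind ("Image", "Video", "Audio") to how many
    files of that kind were uploaded; tags are assumed to be 1-indexed.
    """
    missing = []
    for kind, number in TAG_PATTERN.findall(prompt):
        index = int(number)
        if index < 1 or index > uploads.get(kind, 0):
            missing.append(f"@{kind}{number}")
    return missing


prompt = "Use @Video1 for camera movement, @Image1 as first frame, @Image3 for wardrobe"
print(unresolved_references(prompt, {"Image": 2, "Video": 1}))  # ['@Image3']
```

Here `@Image3` is flagged because only two images were supplied, while `@Video1` and `@Image1` resolve cleanly.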

Given CapCut’s existing base of hundreds of millions of active users worldwide, the global rollout is likely to be fast and adoption broad.

Seedance 2.0 and the Future of AI Video Generation

The launch of Seedance 2.0 marks a genuine inflection point in AI video. The technology has crossed from “impressive demo” territory into something that production teams can seriously consider integrating into their workflows. The reported 90%+ first-try usability rate, native audio, and multimodal reference system are not incremental improvements; they are the features that close the gap between AI video and traditional video production.

For SEO and content marketing professionals specifically, this matters for a straightforward reason: video is already the dominant content format, and tools like Seedance 2.0 are making high-quality video creation accessible to teams and individuals who previously couldn’t afford it. That shifts competitive dynamics, raises the content quality floor, and creates new opportunities for brands willing to move fast.

The copyright and ethical questions are real and unresolved. But so is the momentum. Seedance 2.0 is worth watching closely — and if you’re in the content creation space, probably worth testing the moment it becomes globally available.

Key Takeaways

- Seedance 2.0 launched February 12, 2026, built by ByteDance

- Supports quad-modal input: text, up to 9 images, 3 videos, 3 audio files in one generation

- Native audio-video synchronization — no separate audio step needed

- Multi-shot storytelling with automatic camera sequencing

- Reported 90%+ usable output rate vs. roughly 20% for older tools

- Currently available on Jimeng AI and Doubao; global CapCut rollout coming soon

- Facing copyright objections from Disney, Paramount, the MPA, and SAG-AFTRA

- Competitors: Sora 2 (OpenAI), Veo 3.1 (Google), Kling 3.0 (Kuaishou)

